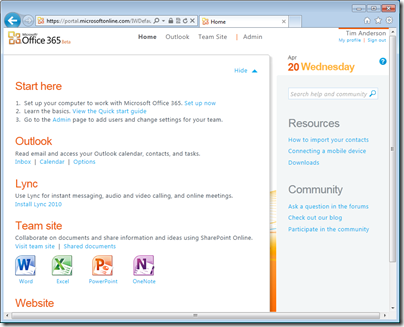

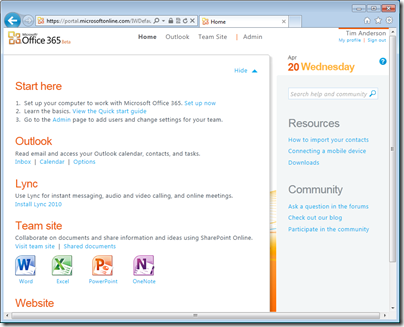

I have been trying Microsoft’s Office 365, which has recently gone into public beta and is expected to go live later this year.

This cloud service provides Exchange 2010, SharePoint 2010 with Office Web Apps, and Lync Server to provide a complete collaboration service for organisations that prefer not to run these servers themselves – which is understandable given their cost and complexity.

Trying the beta is a little complex when you already have a working email and collaboration infrastructure. I chose to use a virtual machine running Windows 7 Professional. I also pre-installed Office 2010 Professional in an attempt to get the best experience.

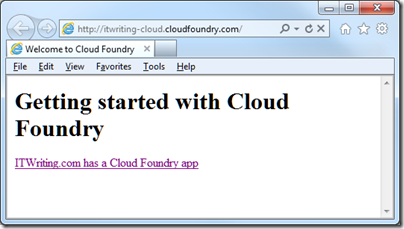

Initial sign-up is easy and I was soon online looking at the admin screen. I could also sign into Outlook Web Access and view my SharePoint site.

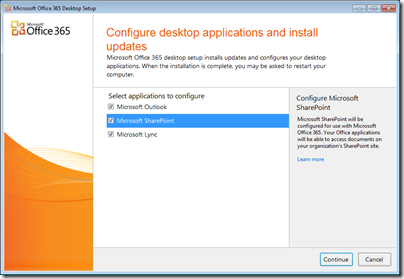

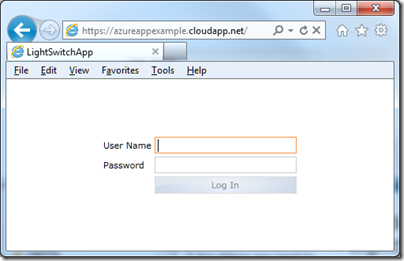

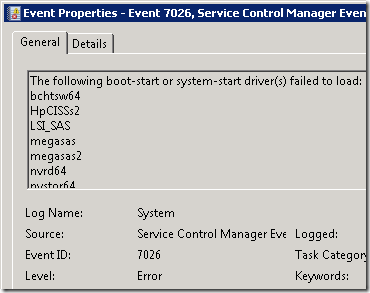

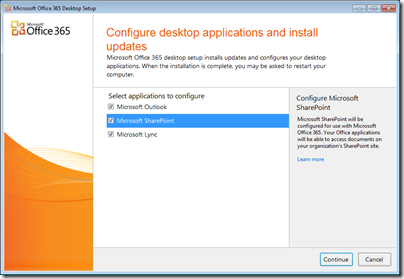

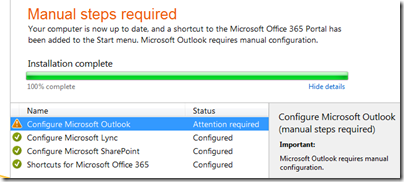

Hassles started when I clicked to set up desktop applications. This is done by a helper application which configures and updates Outlook, SharePoint and Lync on your desktop PC. At this point I had not configured my own domain; I was simply username@username.onmicrosoft.com.

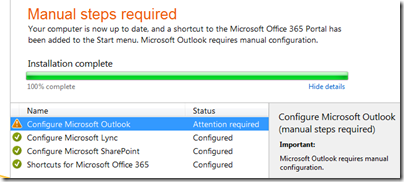

The wizard successfully configured SharePoint and Lync, but not Outlook.

There was a “Learn more” link; but I was in a maze of twisty passages, all alike, none of which seemed to lead to the information I needed.

Part of the problem – and I have noticed this with BPOS as well – is that the style of the online help is masterful at telling you things you know already, while neglecting to tell you what you need to know. It also has a patronising style that I find infuriating, and a habit of showing you marketing videos at every opportunity.

I did eventually find instructions for configuring Outlook manually for Office 365, but they did not work. I also noticed discrepancies in the instructions. For example, this document says that the Exchange server is ch1prd0201.mailbox.outlook.com and that the proxy server for Outlook over HTTP is pod51004.outlook.com. That did not match the server given in my online account for IMAP, POP3 and SMTP use, which was a different podnnnnn.outlook.com. I tried all sorts of combinations and none worked.

Eventually I found this comment in another help document:

> Currently, the only supported scenario for configuring Outlook to work with Office 365 is a fully migrated environment.

I am not sure if this is true, but it did seem to explain my problems. Of course it would be easy for Microsoft to surface this information in a more obvious place, such as building it into the setup wizard. Anyway, I decided to go for the full Office 365 experience and to set up a domain.

Fortunately I have a domain which I obtained for a bright idea that I have yet to find time for. I added it to Office 365. This is a process which involves first adding a CNAME record to the DNS in order to prove ownership, and then making Office 365 the authoritative nameserver for the domain. I was not impressed by the process, because when Microsoft took over the nameserver role it threw away existing settings. This means that if you have a web site or blog at that domain, for example, it will disappear from the internet after the transfer. Once transferred, you can reinstate custom records.
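Since the transfer discards existing settings, it is worth noting down the records you care about beforehand. The following is a minimal sketch of that bookkeeping, not anything Office 365 provides: the `DnsRecord` type, the `records_to_reinstate` helper, and the sample records are all hypothetical, and the choice to skip NS, SOA and MX records (on the assumption that the new host manages those itself) is mine.

```python
from typing import NamedTuple


class DnsRecord(NamedTuple):
    """A simplified DNS record (hypothetical type for this sketch)."""
    name: str
    rtype: str
    value: str


def records_to_reinstate(before, after, managed_types=("NS", "SOA", "MX")):
    """Return records present before the transfer but missing afterwards.

    Records whose type is in `managed_types` are skipped, on the assumption
    that the new nameserver host sets those itself.
    """
    present = set(after)
    return [
        record
        for record in before
        if record not in present and record.rtype not in managed_types
    ]


# Sample data only: example.net names and 192.0.2.x are documentation values.
before = [
    DnsRecord("www", "CNAME", "blog.example.net."),
    DnsRecord("@", "A", "192.0.2.10"),
    DnsRecord("@", "MX", "10 mail.example.net."),
]
after = []  # the transfer threw the old settings away

for record in records_to_reinstate(before, after):
    print(record.name, record.rtype, record.value)
```

With the sample data this lists the `www` CNAME and the root A record as custom records to add back by hand.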

Still, I had chosen an unused domain, so I did not care about this. I set up a new user with an email address at the new domain, and I amended the default SharePoint web site address to use the domain as well.

That all worked fine; but what about Outlook? The bad news was that the setup wizard still failed to configure Outlook, and I still did not know the correct server settings.

I could have contacted support; but I had one last try. I went into the Mail applet in Control Panel and deleted the Outlook profile, so Outlook had no profile at all. Then I ran Outlook, went through the setup wizard, and it all worked, using autodiscover. Out of interest, I then checked the server settings that the wizard had found, which were indeed different in every case from those in the various help documents I had seen.
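Autodiscover works from the email address alone, which is why no server names had to be typed in. As a rough illustration, the sketch below builds the two HTTPS addresses that Exchange autodiscover clients conventionally try first for a given mailbox. The function name `autodiscover_candidates` is hypothetical, real clients also fall back to other methods (such as an HTTP redirect or a DNS SRV lookup) that are not shown here, and nothing in this code contacts a server.

```python
def autodiscover_candidates(email):
    """Return the conventional HTTPS autodiscover URLs for an email address.

    This only derives the candidate URLs; it performs no network requests.
    """
    local, sep, domain = email.strip().rpartition("@")
    if not sep or not local or not domain:
        raise ValueError(f"not a valid email address: {email!r}")
    domain = domain.lower()
    return [
        f"https://{domain}/autodiscover/autodiscover.xml",
        f"https://autodiscover.{domain}/autodiscover/autodiscover.xml",
    ]


# example.com is a placeholder domain, not one from the article.
for url in autodiscover_candidates("user@example.com"):
    print(url)
```

A client posts the mailbox address to whichever of these responds and gets the server settings back, which would explain why the wizard found values that matched none of the help documents.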

A few hassles then, and I am not happy with the way this stuff is documented, but nevertheless it all looks good once set up. The latest Exchange and SharePoint do make a capable collaboration platform, the storage limits are generous – up to 25GB per Exchange mailbox – and I think it makes a lot of sense. I expect Microsoft’s online services to win huge amounts of business that is currently going to Small Business Server, and some business from larger organisations too. Migration from existing Microsoft-platform servers should be smooth.

The biggest disappointment so far is that in Lync online the Enterprise Voice feature is disabled. This means no general-purpose voice over IP, though you can call PC to PC. To get this you have to install Lync on-premise:

> Organizations that want to leverage the full benefits of Microsoft Unified Communications can purchase and deploy Microsoft Lync Server 2010 on their premises as part of Microsoft Office 365. Lync Server 2010 on-premises delivers full enterprise voice and premises-based, dial-in audio conferencing, enabling customers to reduce costs and increase productivity by replacing or enhancing traditional PBX systems.

though it is confusing since Enterprise Voice is listed as a feature of the high-end E4 edition; I believe this implies an on-premise server alongside Office 365 in the cloud.

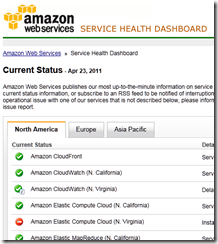

Perhaps the biggest question is the unknown: will Office 365 live up to its promised 99.9% scheduled uptime SLA, and how will its reliability compare to that of Google Apps?
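To put the 99.9% figure in perspective, it helps to convert it into permitted downtime. The sketch below does that arithmetic; the helper name `downtime_budget_minutes` is hypothetical, and it assumes the percentage applies to every minute of the period, whereas a real SLA may measure uptime differently (for example by excluding planned maintenance).

```python
def downtime_budget_minutes(sla_percent, period_days):
    """Minutes of downtime permitted by an uptime percentage over a period."""
    if not 0 <= sla_percent <= 100:
        raise ValueError("sla_percent must be between 0 and 100")
    total_minutes = period_days * 24 * 60
    return total_minutes * (100 - sla_percent) / 100


monthly = downtime_budget_minutes(99.9, 30)
yearly = downtime_budget_minutes(99.9, 365)
print(f"{monthly:.1f} minutes per 30-day month")  # 43.2 minutes
print(f"{yearly / 60:.2f} hours per year")        # 8.76 hours
```

In other words, 99.9% still leaves room for around three quarters of an hour of outage each month.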

Office 365 is priced at $10 per user per month for the basic service (E1), $16 to add Office Web Apps (E2), $24 to add licenses for Office Professional, archiving for Exchange and voicemail (E3), and $27 to add Enterprise Voice (E4). The version in beta is E3.
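For budgeting, the per-user prices multiply up quickly. Here is a small sketch using the beta-period prices quoted above; the names `PLAN_PRICES_USD`, `monthly_cost` and `annual_cost` are hypothetical, the 25-user organisation is an invented example, and it assumes every user is on the same plan with no discounts or taxes.

```python
# Per-user monthly prices in US dollars, as quoted in this article.
PLAN_PRICES_USD = {"E1": 10, "E2": 16, "E3": 24, "E4": 27}


def monthly_cost(plan, users):
    """Total monthly cost for `users` people on a single plan."""
    if users < 0:
        raise ValueError("users must not be negative")
    return PLAN_PRICES_USD[plan] * users


def annual_cost(plan, users):
    """Total cost over twelve months for `users` people on a single plan."""
    return monthly_cost(plan, users) * 12


for plan in PLAN_PRICES_USD:
    print(plan, monthly_cost(plan, 25), annual_cost(plan, 25))
```

For 25 users, E3 comes to $600 a month, or $7,200 a year.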