Microsoft has released details of its StorSimple 8000 Series, the first major new release since it acquired the hybrid cloud storage appliance business back in late 2012.

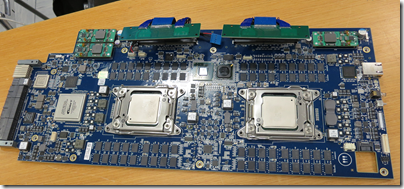

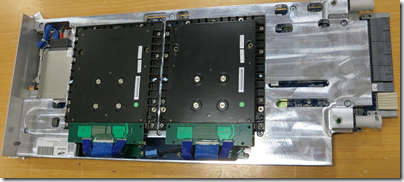

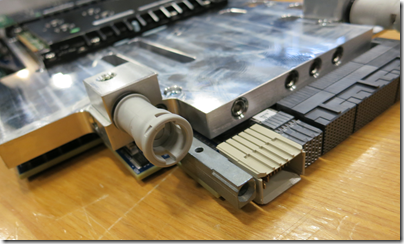

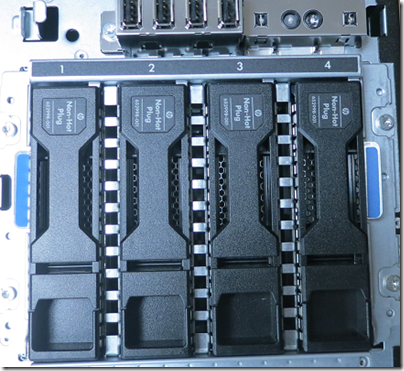

I first came across StorSimple at what proved to be the last MMS (Microsoft Management Summit) event last year. The concept is brilliant: present the network with infinitely expandable storage (in reality limited to 100TB – 500TB depending on model), storing the new and hot data locally for fast performance, and seamlessly migrating cold (i.e. rarely used) data to cloud storage. The appliance includes SSD as well as hard drive storage so you get a magical combination of low latency and huge capacity. Storage is presented using iSCSI. Data deduplication and compression increase effective capacity, and cloud connectivity also enables value-add services including cloud snapshots and disaster recovery.
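To make the hot/cold idea concrete, here is a toy sketch in Python. It is not StorSimple's actual algorithm, which I have not seen; the `TieredStore` class, its block-count capacity and the least-recently-used eviction policy are all my own assumptions, chosen only to show how a small local tier can front a much larger cloud tier.

```python
from collections import OrderedDict


class TieredStore:
    """Toy model: a bounded local tier in front of an unbounded cloud tier.

    Hypothetical illustration only; the LRU policy is an assumption.
    """

    def __init__(self, local_capacity):
        self.local_capacity = local_capacity  # max blocks held locally
        self.local = OrderedDict()            # block_id -> data, coldest first
        self.cloud = {}                       # stand-in for cloud storage

    def write(self, block_id, data):
        # New data always lands locally as the hottest block and
        # supersedes any older copy that was tiered out to the cloud.
        self.cloud.pop(block_id, None)
        self.local[block_id] = data
        self.local.move_to_end(block_id)
        self._evict_cold()

    def read(self, block_id):
        if block_id in self.local:
            # Local hit: mark the block as recently used.
            self.local.move_to_end(block_id)
            return self.local[block_id]
        # Cold block: fetch it from the cloud and promote it locally.
        data = self.cloud.pop(block_id)
        self.local[block_id] = data
        self._evict_cold()
        return data

    def _evict_cold(self):
        # Move the least recently used blocks to the cloud until we fit.
        while len(self.local) > self.local_capacity:
            cold_id, cold_data = self.local.popitem(last=False)
            self.cloud[cold_id] = cold_data


store = TieredStore(local_capacity=2)
for block_id in ("a", "b", "c"):
    store.write(block_id, f"data-{block_id}")
print(sorted(store.cloud))  # ['a']
```

The caller only ever sees `read` and `write`; where a block physically lives is hidden, which is the point of presenting the whole thing as a single iSCSI volume.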

The two new models are the 8100 and the 8600:

| | 8100 | 8600 |
| --- | --- | --- |
| Usable local capacity | 15TB | 40TB |
| Usable SSD capacity | 800GB | 2TB |
| Effective local capacity | 15-75TB | 40-200TB |
| Maximum capacity including cloud storage | 200TB | 500TB |
| Price | $100,000 | $170,000 |
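The "effective local capacity" ranges appear to be the usable capacity multiplied by a data reduction ratio of between 1x and 5x (75/15 and 200/40 both come to 5). That ratio is my inference from the published figures rather than something Microsoft stated, and the function name below is mine, but the arithmetic is easy to check:

```python
def effective_capacity_tb(usable_tb, reduction_ratio):
    """Effective capacity after deduplication and compression.

    reduction_ratio is the assumed combined data reduction factor
    (1.0 means the data does not shrink at all).
    """
    return usable_tb * reduction_ratio


# Usable local capacity in TB, taken from the table above.
models = {"8100": 15, "8600": 40}

for name, usable in models.items():
    low = effective_capacity_tb(usable, 1.0)   # assumed worst case
    high = effective_capacity_tb(usable, 5.0)  # assumed best case
    print(f"{name}: {low:.0f}-{high:.0f}TB effective")
# 8100: 15-75TB effective
# 8600: 40-200TB effective
```

In practice the ratio you get depends entirely on how compressible and repetitive your data is, so the top of the range should be treated as a best case.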

Of course there is more to the new models than bumped-up specs. The earlier StorSimple models supported both Amazon S3 (Simple Storage Service) and Microsoft Azure; the new models support only Azure blob storage. VMware VAAI (vStorage APIs for Array Integration) is still supported.

On the positive side, StorSimple is now backed by additional Azure services – note that these only work with the new 8000 series models, not with existing appliances.

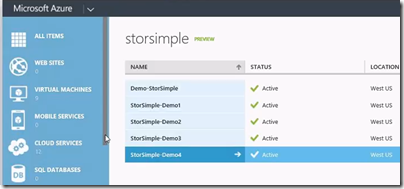

The Azure StorSimple Manager lets you manage any number of StorSimple appliances from the Azure portal – note this is in the old Azure portal, not the new preview portal, which intrigues me.

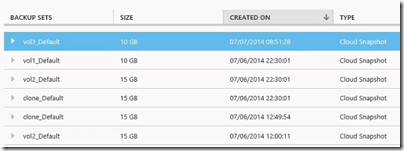

Backup snapshots mean you can go back in time in the event of corrupted or mistakenly deleted data.

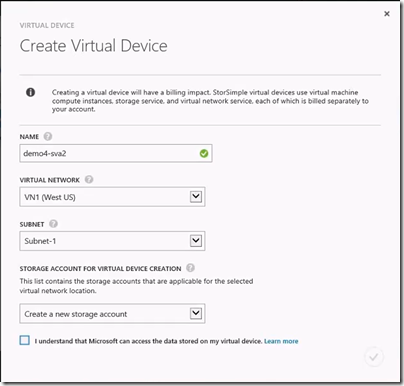

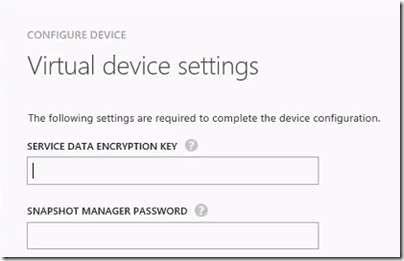

The Azure StorSimple Virtual Appliance has several roles. You can use it as a kind of reverse StorSimple; the virtual device is created in Azure at which point you can use it on-premise in the same way as other StorSimple-backed storage. Data is uploaded to Azure automatically. An advantage of this approach is if the on-premise StorSimple becomes unavailable, you can recreate the disk volume based on the same virtual device and point an application at it for near-instant recovery. Only a 5MB file needs to be downloaded to make all the data available; the actual data is then downloaded on demand. This is faster than other forms of recovery which rely on recovering all the data before applications can resume.
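The reason recovery can be near-instant is that only a small map of where the data lives needs to be downloaded first, with the blocks themselves fetched the first time something reads them. The sketch below shows that general pattern; the `LazyVolume` class, the block map layout and the `fetch_block` callback are hypothetical names of mine, not the StorSimple implementation.

```python
class LazyVolume:
    """Volume that is usable as soon as its block map is loaded.

    Hypothetical illustration of on-demand recovery: data blocks are
    fetched from cloud storage only when first read.
    """

    def __init__(self, block_map, fetch_block):
        self.block_map = block_map      # block number -> cloud object key
        self.fetch_block = fetch_block  # callable: object key -> bytes
        self.cache = {}                 # blocks already pulled down locally

    def read(self, block_number):
        if block_number not in self.cache:
            key = self.block_map[block_number]
            self.cache[block_number] = self.fetch_block(key)
        return self.cache[block_number]


# Stand-in for cloud storage, with a counter to show what is downloaded.
cloud_objects = {"obj-0": b"alpha", "obj-1": b"beta", "obj-2": b"gamma"}
downloads = []


def fetch_from_cloud(key):
    downloads.append(key)
    return cloud_objects[key]


# "Recovery" is just loading the small map; no data has moved yet.
volume = LazyVolume({0: "obj-0", 1: "obj-1", 2: "obj-2"}, fetch_from_cloud)
print(len(downloads))   # 0

print(volume.read(1))   # b'beta'
volume.read(1)          # second read is served from the local cache
print(downloads)        # ['obj-1']
```

The trade-off is that the first read of each block pays the cloud round trip, so an application is available immediately but may run slowly until its working set has been pulled back.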

The alarming check box “I understand that Microsoft can access the data stored on my virtual device” was explained by Microsoft technical product manager Megan Liese as meaning simply that data is in Azure rather than on-premise, but I have not seen similar warnings for other Azure data services, which is odd. Further to this topic, another journalist asked Marc Farley, also on the StorSimple team, whether you can mark data in standard StorSimple volumes not to be copied to Azure, for compliance or security reasons. “Not right now” was the answer, though it sounds as if this is under consideration. I am not sure how this would work within a volume, since it would break backup and data recovery, but it would make sense to be able to specify volumes that must always remain on-premise.

All data transfer between Azure and on-premise is encrypted, and the data is also encrypted at rest, using a service data encryption key which according to Farley is neither stored by nor accessible to Microsoft.

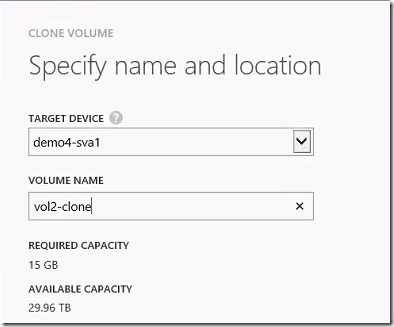

Another way to use a virtual appliance is to make a clone of on-premise data available, for tasks such as analysing historical data. The clone volume is based on the backup snapshot you select, and is disconnected from the live volume on which it is based.

StorSimple uses Azure blob storage but the pricing structure is different from that of standard blob storage; unfortunately I do not have details of this. You can access the data only through StorSimple volumes, since the data is stored using internal data objects that are StorSimple-specific. Data stored in Azure is redundant using the usual Azure “three copies” principle; I believe this includes geo-redundancy though this may be a customer option.

StorSimple appliances are made by Xyratex (which is being acquired by Seagate) and you can find specifications and price details on the Seagate StorSimple site, though we were also told that customers should contact their Microsoft account manager for details of complete packages. I also recommend the semi-official blog by a Microsoft technical solutions professional based in Sydney, which has a ton of detailed information.

StorSimple makes huge sense, but with six-figure pricing this is an enterprise-only solution. How would it be, I muse, if the StorSimple software were adapted to run as a Windows service rather than only in an appliance, so that you could create volumes in Windows Server that use similar techniques to offer local storage that expands seamlessly into Azure? That also makes sense to me, though when I asked at a Microsoft Azure workshop about the possibility I was rewarded with blank looks; but who knows, they may know more than is currently being revealed.

![image_thumb[14] image_thumb[14]](http://www.itwriting.com/blog/wp-content/uploads/2013/03/image_thumb14_thumb.png)

![image_thumb[16] image_thumb[16]](http://www.itwriting.com/blog/wp-content/uploads/2013/03/image_thumb16_thumb.png)

![image_thumb[17] image_thumb[17]](http://www.itwriting.com/blog/wp-content/uploads/2013/03/image_thumb17_thumb.png)

![image_thumb[18] image_thumb[18]](http://www.itwriting.com/blog/wp-content/uploads/2013/03/image_thumb18_thumb.png)

![image_thumb[13] image_thumb[13]](http://www.itwriting.com/blog/wp-content/uploads/2013/03/image_thumb13_thumb.png)