I decided to install the open source Alternative PHP Cache on this server in order to improve performance. Interesting exercise. This server runs Debian Linux, and there are several ways to install APC:

1. Install the official package with apt-get install php-apc or similar

2. Install with the PHP Extension Community Library which goes something like:

apt-get install apache2

apt-get install libapache2-mod-php5

apt-get install php-pear

apt-get install php5-dev

apt-get install make

apt-get install apache2-prefork-dev

pecl install apc

The advantage over (1) is that you get the latest stable build, version 3.1.6, instead of the Debian package which is 3.0.19

3. Download the source and do something like this to install.

I started with option (2) though I came to regret it. The first problem is that the pecl installer will build with your currently-installed Apache, and if you later upgrade Apache it might break. Sticking with the official package is safer, even though it is very out of date.

I could live with the idea of re-installing APC every time Apache was updated if necessary, but I had another problem. I was up and running with APC 3.1.6 and pleased with the results, until after a while everything stopped working and my blog became a screen full of messages saying “Unable to allocate memory for pool”.

It looks like this bug, which was said to be fixed in version 3.1.5, but if you look to the end of the comments there is one from today with the same issue, and no suggestions about how to fix it.

The ancient version, on the other hand, has performed perfectly so far.

Another point of interest: I found it challenging to discover the best settings for APC. By default the install does no more than to enable the extension; but the default setting is unlikely to be the best one. The documentation tells you what each setting does, but not how to choose the best values for those settings. Should the cache be the default 32MB, or something much greater? Another thing to note: if you compile with MMAP support, which is the default, the value of apc.shm_segments is ignored, and the value in apc.shm_size will solely determine the size of the cache.

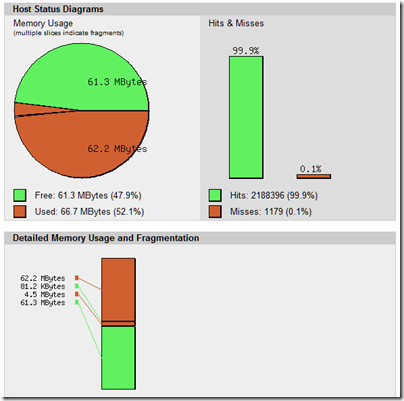

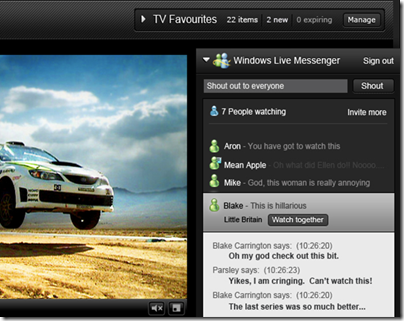

I found this Moodle article on installing APC in Windows helpful. What you do is first to find the file apc.php which the install put somewhere like /usr/share/doc/php-apc – in my case it was also compressed - and put this on your website, preferably in a password-protected folder. This tells you the status of the cache. The aim is to have the cache just big enough that it does not become full and highly fragmented. Here is what I get after a short run with 128MB, which may be a little too much:

Another tip is to set apc.stat to 0. This means APC will not check for changes in PHP files since they were last compiled and cached. The downside is that every time you change a file you have to restart the web server; but the benefit is better performance, which is the goal after all.