I’m just back from Microsoft’s Ignite event in Atlanta, Georgia, where around 23,000 attendees, mostly in IT admin roles, assembled to learn about the company’s platform.

There are always many different aspects to this type of event. The keynotes (there were two) are for news and marketing hype, while the sessions offer plenty of solid technical content, of which of course you can only attend a small fraction. There was also an impressive Expo at Ignite, well supported both by third parties and by Microsoft, though getting to it was a long walk and I fear some will never find it. If you go to one of these events, I recommend the Microsoft stands, because there are normally some core team members hanging around each one and you can get excellent answers to questions as well as a chance to give them some feedback.

The high-level story from Ignite is that the company is doing OK. The event was sold out, and Corporate VP Brad Anderson assured me that many more tickets could have been sold had the venue been bigger. The vibe was positive and it looks like Microsoft’s cloud transition is working, despite having to compete with Amazon on IaaS (Infrastructure as a Service) and with Google on productivity and collaboration.

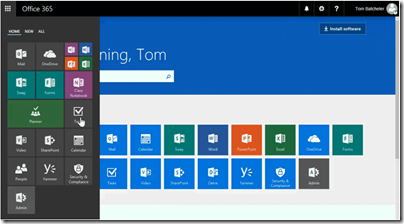

My theory here is that Microsoft’s cloud advantage is based on Office 365, of which the core product is hosted Exchange and the Office suite of applications licensed by subscription. The dominance of Exchange in business made the switch to Office 365 the obvious solution for many companies; as I noted in 2011, the reality is that many organisations are not ready to give up Word and Excel, Outlook and Active Directory. The move away from on-premises Exchange is also compelling, since running your own mail server is no fun, and at the small business end Microsoft has made it an expensive option following the demise of Small Business Server. Microsoft has also made Office 365 the best value option for businesses licensing desktop Office; in fact, I spoke to one attendee who is purchasing a large volume of Office 365 licenses purely for this reason, while still running Exchange on-premises. Office 365 lets users install Office on up to 5 PCs, Macs and mobile devices.

Office 365 is only the starting point of course. Once users are on Office 365 they are also on Azure Active Directory, which becomes a hugely useful single sign-on for cloud applications. Microsoft is now building a sophisticated security story around Azure AD. The company can also take advantage of the Office 365 customer base to sell related cloud services such as Dynamics CRM Online. Integrating with Office 365 and/or Azure AD has also become a great opportunity for developers. If I had any kind of cloud-delivered business application, I would be working hard to get it into the Office Store and trying to win a place on the newly refreshed Office App Launcher.

Office 365 users have had to put up with a certain amount of pain, mainly around the interaction between SharePoint Online/OneDrive for Business and their local PC. There are signs that this is improving, and a key announcement made at Ignite by Jeff Teper is that SharePoint (which includes Team Sites) will be supported by the next-generation sync client. I hope this means goodbye to the ever-problematic Groove client, and a bit less confusion over competing OneDrive icons in the notification area.

A quick shout-out too for Office 365 Groups, despite its confusing name (how many different kinds of groups are there in Office 365?). Groups are ad-hoc collections of users which you set up for a project, department or role. Each group then gets an automatic email distribution list, shared inbox, calendar, file library, OneNote notebook (a kind of wiki) and a planning tool. None of this is anything you could not set up before, but it is packaged in a way that is easy to grasp. I was told that usage is soaring, which does not surprise me.

I do not mean to diminish the importance of Azure, the cloud platform. Despite a few embarrassing outages, Microsoft has evolved the features of the service rapidly as well as building the necessary global infrastructure to support it. At Ignite, there were several announcements, including new, more powerful virtual machines, IPv6 support, general availability of Azure DNS, faster networking of up to an amazing 25 Gbps powered by FPGAs, and the public preview of a Web Application Firewall.

My overall take on Azure? Microsoft has the physical infrastructure to compete with AWS. Amazon’s service is amazing and reliable, though, and I suspect it can be cheaper, bearing in mind Amazon’s clever pricing options and its lower prices for application services like database management, message queuing, and so on. If you want to run Windows Server and SQL Server in the cloud, Azure will likely be better value. Value is not everything though, and Microsoft has done a great job of making Azure accessible; with a developer hat on, I love how easy it is to fire up VMs or deploy web applications from Visual Studio. Microsoft of course is busy building hooks to Azure into its products, so that if you have System Center on-premises, for example, you will be constantly pushed towards Azure services (though note that the company has also added support for other public clouds in places).

There are some distinctive features in Microsoft’s cloud platform, not least the forthcoming Azure Stack, which offers private cloud as an appliance.

I put “getting more like Google” in my headline. Why is that? There are a couple of reasons. One is that CEO Satya Nadella focused his keynote on artificial intelligence (AI), which he described as “the ability to reason over large amounts of data and convert that into intelligence,” and then, “How we infuse every application, Cortana, Office 365, Dynamics 365 with intelligence.” He went on to describe Cortana (the personal agent that gets a bit in the way in Windows 10) as “the third run time … it’s what helps mediate the human computer interaction.” Cortana, he added, “knows you deeply. It knows your context, your family, your work. It knows the world. It is unbounded. In other words, it’s about you, it’s not about any one device. It goes wherever you go.”

I have heard this kind of speech before, but from Google’s Eric Schmidt rather than from Microsoft. While on the consumer side Google is better at making this work, there is an opportunity in a business context for Microsoft based on Office 365 and perhaps the forthcoming LinkedIn acquisition. Either way, both companies are clearly going down the track of mining data in order to deliver more helpful and customized experiences.

It is also noticeable that Office 365 is now delivering increasing numbers of features that cannot be replicated on-premises, or that may come to on-premises one day but Office 365 users get them first. Further, Microsoft is putting significant effort into improving the in-browser experience, rather than pushing users towards Windows applications as you might have expected a few years back. It is cloud customers who are now getting the best from Microsoft.

While Microsoft is getting more like Google, I do not mean to say that it is like Google. The business model is different, with Microsoft’s based on paid licenses versus Google’s primarily advertising model. Microsoft straddles cloud and on-premises, whereas Google has something close to a pure cloud play. There is Android, but that drives advertising and cloud services rather than being a profit centre in itself. And so on.

There were a couple more notable events during Nadella’s keynote.

Distinguished Engineer Doug Burger and one of Microsoft’s custom FPGA boards.

One was Distinguished Engineer Doug Burger’s demonstration of the power of the FPGA boards which have been added to Azure servers. These sit between the servers and the network, so they can operate partly independently of their hosts (I also did a short interview with Burger on the subject).

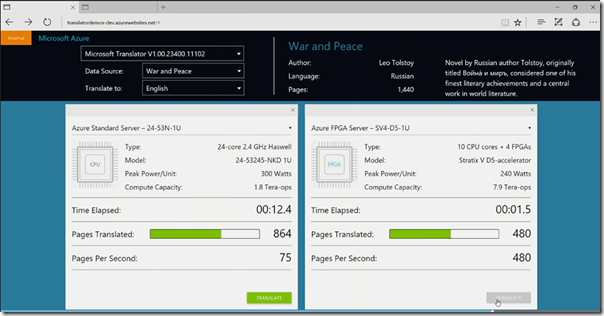

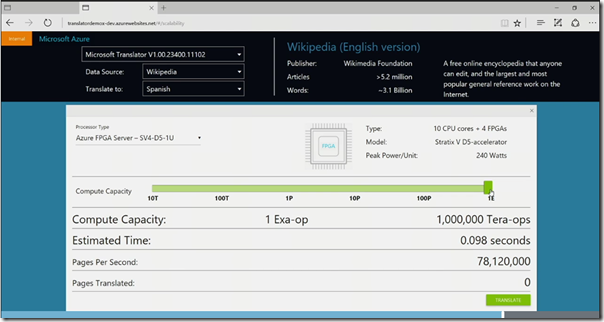

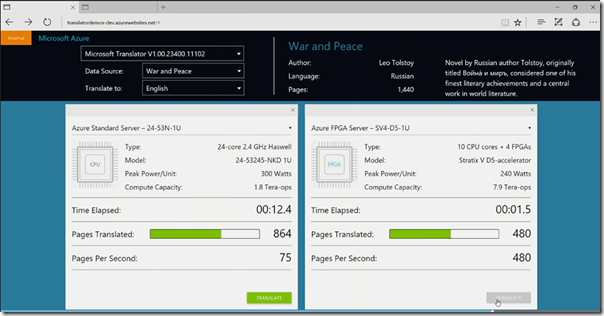

During the keynote, he gave what he called a “visual demo” of the impact of these FPGA accelerators on Azure’s processing power. First we saw accelerated image recognition. Then came a translation example, using Tolstoy’s War and Peace as the text.

The FPGA-enabled server consumed less power but performed the translation 8 times faster. The best was still to come, though. What about translating the whole of English Wikipedia? “I’ll show you what would happen if we were to throw most of our existing global deployment at it,” said Burger.

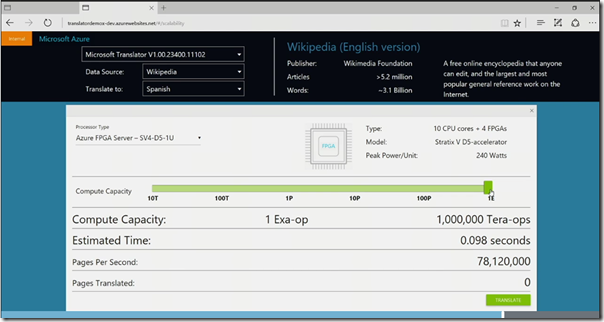

“Less than a tenth of a second” was the answer. Looking at that screen showing 1 Exa-op felt like being present at the beginning of a computing revolution. As the Top500 supercomputing site observes, “the fact the Microsoft has essentially built the world’s first exascale computer is quite an achievement.” Exascale means a billion billion (10^18) operations per second.

However, did we see Wikipedia translated, or just an animation? Consider three things. First, Burger spoke of “what would happen.” Second, the screen says “Estimated time.” Third, the design of Azure’s FPGA network (as I understand it) means that utilising it this way could impact other users of the service, since all network traffic to the hosts goes through these boards. Taken together, it seems that we saw a projected result and not an actual one. That means we should be sceptical about whether this would actually work as advertised, though it remains amazing.

One more puzzle before I wrap up. Adobe CEO Shantanu Narayen appeared on stage with Nadella in the morning keynote to announce that Adobe will make Azure its “preferred cloud.” This appears to include moving Adobe’s core cloud services from Amazon Web Services, where they currently run, to Azure. Narayen:

“we’re thrilled and excited to be announcing that we are going to be delivering all of our clouds, the Adobe Document Cloud, the Marketing Cloud and the Creative Cloud, on Azure, and it’s going to be our preferred way of bringing all of this innovation to market.”

Narayen said that Adobe’s decision was based on Microsoft’s work in machine learning and intelligence. He also looked forward to integrating with Dynamics CRM for “one unified and integrated sales and marketing service.”

This seems to me interesting in all sorts of ways, not only as a coup for Microsoft’s cloud platform versus AWS, but also as a potential case study in migrating cloud services from one public cloud to another. But what exactly is Adobe doing? I received the following statement from an AWS spokesperson:

“We have a significant, long-term relationship and agreement with Adobe that hasn’t changed. Their customers will want to use AWS, and they’re committed to continuing to make that easy.”

It does seem strange to me that Adobe would want to move such a significant cloud deployment, one that, as far as I know, works well. I am trying to find out more.