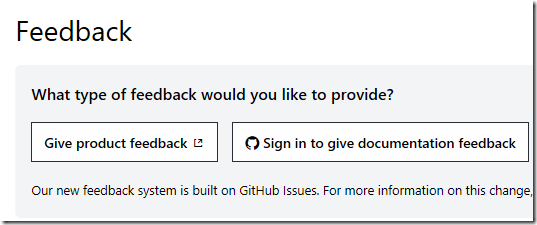

Microsoft is introducing a new feedback system for https://docs.microsoft.com, used for its technical documentation.

The new system, which you can already see for certain topics such as the Visual Studio IDE, is based on GitHub issues. When you leave a comment, you can specify whether it concerns documentation or product functionality.

So far so good, but the downside is that all existing comments will be deleted:

The statement “Old comments will not be carried over. If content within a comment thread is important to you, please save a copy.” is unhelpful. Nobody knows what comments will be useful to them in future.

Few things sap enthusiasm for community participation more than having all the past contributions into which you have put effort suddenly zapped. Nor is this the first time, as user guibirow notes:

As much I like the new system idea, I hate the fact that this is happening over and over.

It used to be a Disqus comment system, then moved to LiveFyre, then moved now to this new system, what will be the next?

The worst part of this all is that MS does not care about past content lost on these discussions, so many times I found issues described in the docs that are gone now.

Please, pay attention to your previous mistakes, don’t let the information be lost again, at lest import them as closed issue in the new system.

Sometimes progress has a cost and that is understood. However it is not impossible to migrate content from one system to another. It just takes effort.

Update: Microsoft’s Rob Eisenberg has responded with an explanation and mitigation plan regarding existing comments. He says that a straight migration of the comments is impossible:

- There is a lot of garbage, spam and even dangerous content within existing LiveFyre comments which would violate GitHub terms of usage and our open source code of conduct, as well as cause security problems.

- There isn’t a good way to map LiveFyre users to GitHub users and using a bot account to anonymously add comments is questionable with respect to OSS practices and GitHub terms of use.

- For legal and privacy reasons, we cannot move user-associated data from one system to another without consent from users (GDPR).

- LiveFyre conversations are threaded, while GitHub issues are not.

- Placing the old comments into the GitHub Issues system would derail the entire GitHub Issues workflow for both customers and employees and muddle the data.

- It isn’t clear whether there is a way to invoke GitHub APIs for a migration of this scale such that it wouldn’t violate GitHub API terms of use.

He also has an archiving proposal:

We would take the comments from an article on docs.microsoft.com and then convert them into a Markdown file. During this process, we would strip all user info (remember GDPR). The Markdown file would then be committed to a GitHub repo. Finally, at the bottom of the feedback section, next to the link that says "View on GitHub" we would add a second link that said something like "View Comment Archive". This link would connect you directly to the Markdown comment file for that page.

This sounds positive. At the same time, it is a mess that illustrates some of the disadvantages of a “best of breed” approach to solving technical problems. If Microsoft could use its own technology to host a documentation and commenting system, and a source code management and issue tracking system for that matter, this issue would not occur, and users would not need multiple accounts, causing the legal issues mentioned above.

Microsoft in fact used to use its own platform for all the above but decided to shift to using third-party solutions because they worked better. That seemed to be a good thing, improving user experience and productivity, but becomes a problem when what seems to be the best third-party option changes.